AMD is using CES 2026 to spotlight strong momentum behind ROCm, its open-source GPU computing software stack built as a flexible alternative to proprietary AI and compute platforms. Originally known as the Radeon Open Compute platform (the name is no longer treated as an acronym), ROCm has long been associated with high-performance computing and datacenter-class AMD hardware, including Instinct accelerators and Radeon Pro products. Now it is expanding more quickly into mainstream AI development and creator workflows across more devices and operating systems.

At its core, ROCm is the behind-the-scenes toolkit that makes AMD GPUs usable for serious compute work. It bundles the pieces developers need (runtimes, compilers, libraries, and performance tools) so that workloads can scale from low-level GPU kernels up to popular end-user applications. That breadth is why ROCm is increasingly tied to AI model training and inference, demanding HPC tasks such as scientific simulation and data analysis, and other general-purpose GPU computing workloads that have nothing to do with graphics rendering.

Recent ROCm releases are also helping AMD hardware post standout results in generative AI, including text-to-video generation. AMD says ROCm 7.1 has already delivered strong performance with models such as LTX Video 0.9 and Wan 2.2, with completion times of roughly three minutes on Ryzen AI Max+ APUs and roughly 45 seconds on Radeon AI Pro GPUs, depending on the workload and configuration.

With ROCm 7.2, AMD is pushing compatibility further by adding support for the newest Ryzen AI 400 series APUs on both Windows and Linux. That matters for anyone who wants to run modern AI tools locally, whether for image generation, video generation, experimentation, or production pipelines, without being locked to a single OS. AMD also notes that the ComfyUI team is preparing a ComfyUI build that integrates ROCm 7.2, and that ROCm 7.2 can be installed alongside the Adrenalin 26.1.1 software stack to simplify setup on supported systems.

Windows support is another key turning point. ROCm first gained official Windows support in version 7.1, and AMD positioned that release as a major leap forward, claiming up to a 5x performance uplift over ROCm 6.4.4. On Ryzen AI Max APUs specifically, AMD reports notable gains in popular generative AI workloads: 2.6x faster performance in Stable Diffusion XL (SDXL) and 5.2x faster performance in Flux S when moving to ROCm 7 from the previous version.

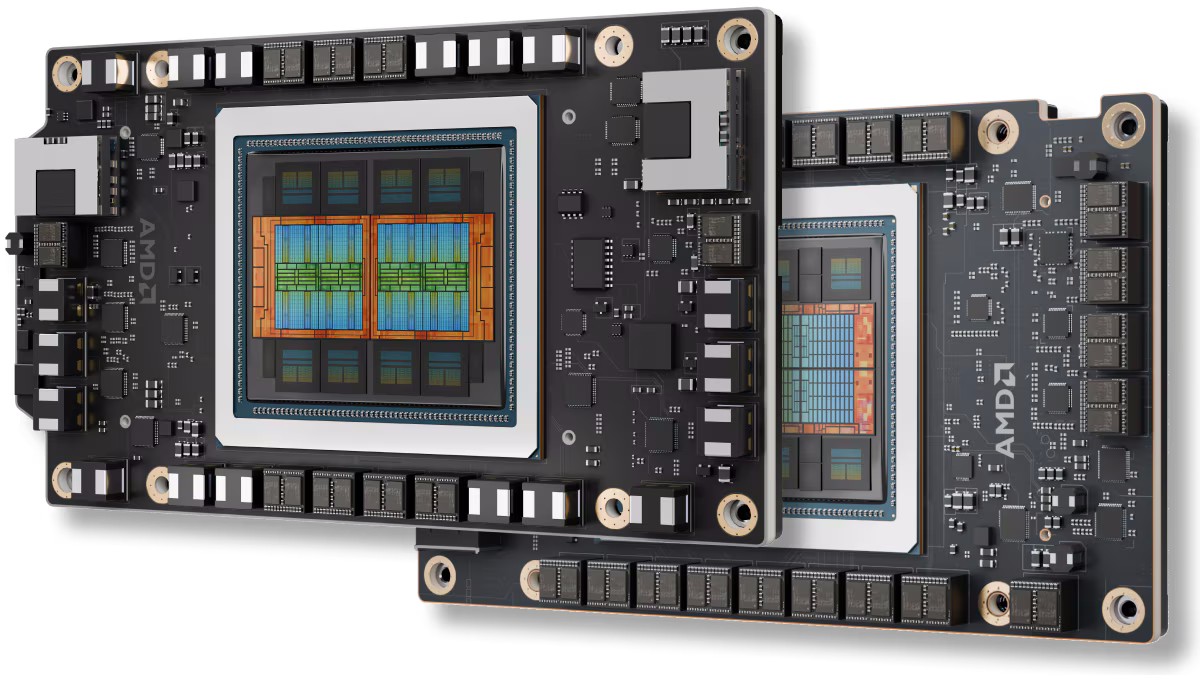

On the GPU side, ROCm 7 is also designed to take advantage of Radeon AI Pro R9700-class hardware. AMD claims up to a 5.4x performance boost in the Wan 14B video generation model, highlighting how ROCm’s newer software optimizations can translate into real-world improvements in AI inference and content creation.

Overall, the ROCm 7.2 update at CES 2026 signals a clear direction: broader hardware coverage, stronger Windows and Linux support, and a sharper focus on practical AI training and inference performance. For developers, researchers, and creators who want AMD hardware to play a bigger role in local AI workflows, ROCm is quickly becoming the software foundation that makes that possible.